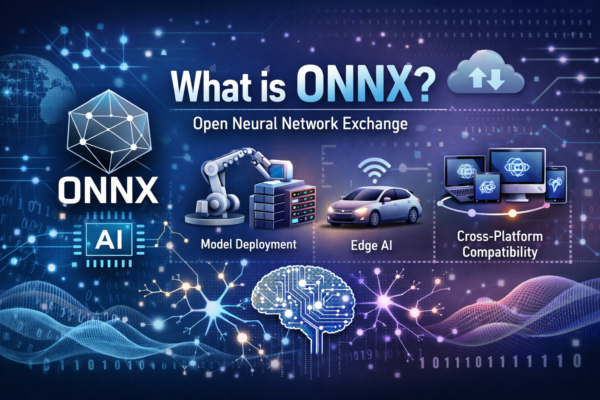

In Part 1 of this series, I focused on ONNX itself—what it is, why it exists, and how it enables interoperability between machine learning frameworks.

Part 2 continues that journey by shifting the focus from model representation to model execution. ONNX Runtime is the component that turns a standardized model into something that can run efficiently, reliably, and at scale across very different environments. Understanding it is essential to understanding modern AI deployment, and you may well be using it already without realizing it.

From ONNX to ONNX Runtime: The Missing Execution Layer

ONNX defines a common language for machine learning models. It standardizes how a model is described, independent of whether it was trained in PyTorch, TensorFlow, or another framework. However, a model description alone does not solve the problem of execution. At some point, that graph of operators must be evaluated efficiently on real hardware.

This is where ONNX Runtime enters the picture. ONNX Runtime is a high-performance inference engine designed to load ONNX models and execute them efficiently across a wide range of platforms and hardware configurations. Conceptually, if ONNX answers the question “What does this model look like?”, ONNX Runtime answers “How do we run it fast, correctly, and everywhere?”
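To make the idea of "evaluating a graph of operators" concrete, here is a deliberately tiny sketch in Python. It is not how ONNX Runtime is implemented. The `(op_type, inputs, output)` node tuples and the `KERNELS` table are hypothetical simplifications, and the values are plain scalars rather than tensors. What it does show is the basic job of any inference engine: walk the nodes in topological order and dispatch each one to a kernel for its operator type.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical, simplified node format: (op_type, input_names, output_name).
Node = Tuple[str, List[str], str]

# A toy "kernel registry": one Python function per operator type.
KERNELS: Dict[str, Callable[..., float]] = {
    "Add": lambda a, b: a + b,
    "Mul": lambda a, b: a * b,
    "Relu": lambda x: max(0.0, x),
}


def run_graph(nodes: List[Node], feeds: Dict[str, float]) -> Dict[str, float]:
    """Evaluate nodes in the given (topological) order, keeping every intermediate value."""
    values = dict(feeds)
    for op_type, inputs, output in nodes:
        kernel = KERNELS[op_type]
        values[output] = kernel(*(values[name] for name in inputs))
    return values


# y = Relu(x * w + b), with the nodes already listed in topological order.
graph: List[Node] = [
    ("Mul", ["x", "w"], "xw"),
    ("Add", ["xw", "b"], "z"),
    ("Relu", ["z"], "y"),
]
print(run_graph(graph, {"x": 2.0, "w": -3.0, "b": 1.0})["y"])  # 0.0
```

A real engine adds everything this toy leaves out, such as tensor types and shapes, memory management, and optimized, hardware-specific kernels, which is precisely the work ONNX Runtime takes on.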

Seen through this lens, ONNX Runtime is not an optional add-on. It is the execution layer that makes ONNX models practical in production systems.

The Problem ONNX Runtime Solves

Deploying machine learning models in the real world has historically been fragmented and complex. A model might be trained on a powerful GPU-equipped workstation, tested on a laptop, and ultimately deployed to a cloud service, an edge device, or even a web browser. Each of these environments has different constraints, hardware capabilities, and performance expectations.

Without a unifying runtime, teams often had to rewrite inference logic, switch libraries, or accept inconsistent performance characteristics across platforms. This not only increased engineering effort but also introduced risk and unpredictability.

ONNX Runtime addresses this problem by providing a single, consistent inference engine that abstracts away much of this complexity. It allows the same ONNX model to be executed across environments while still taking advantage of platform-specific optimizations. As a result, developers can focus on model quality and application logic rather than reinventing inference for every deployment target.

What ONNX Runtime Is Used For in Practice

At its core, ONNX Runtime is built for inference. It is optimized for running trained models efficiently rather than for training them. This distinction is important, because inference has very different requirements: low latency, predictable performance, efficient memory usage, and stable behavior across environments.

In scenarios such as local AI inference—like those enabled by Foundry Local—these characteristics become even more critical. Running models locally often means operating under tighter resource constraints while still delivering responsive user experiences. ONNX Runtime is designed precisely for this space, making it a natural fit for applications that need fast, local, and private inference.

ONNX Runtime Inside Microsoft Products (Often Invisibly)

One of the reasons ONNX Runtime generates so many questions is that it is frequently used as an internal component rather than a product developers explicitly select. Many Microsoft tools, platforms, and SDKs embed ONNX Runtime as their inference engine. As a result, developers and end users benefit from its performance and portability without necessarily being aware of its presence.

This invisibility is a sign of maturity rather than obscurity. ONNX Runtime is infrastructure software. When it works well, it fades into the background, quietly enabling consistent AI behavior across products and platforms. The questions raised at the meetup were less about unfamiliarity and more about recognition—people had already been relying on it.

Core Benefits: Performance, Portability, and Predictability

The value of ONNX Runtime emerges most clearly when its benefits are viewed as outcomes rather than features. Performance comes from aggressive graph optimizations such as constant folding and operator fusion, efficient operator implementations, and tight integration with hardware acceleration. Portability follows naturally from its cross-platform design, allowing the same model to run on different operating systems and device classes without modification. Predictability comes from consistent execution semantics, which reduce surprises when moving models from development to production.
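Constant folding is one of the simpler graph optimizations ONNX Runtime applies: any part of the graph that depends only on constant values can be computed once, when the session is initialized, instead of on every inference call. The sketch below illustrates the idea on a toy scalar graph. The node format and the `fold_constants` helper are hypothetical and much simpler than the runtime's own passes. In the real Python API you do not call such a pass yourself; optimizations are controlled through `onnxruntime.SessionOptions`, for example by setting `graph_optimization_level` to `onnxruntime.GraphOptimizationLevel.ORT_ENABLE_ALL`.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical, simplified node format: (op_type, input_names, output_name).
Node = Tuple[str, List[str], str]

KERNELS: Dict[str, Callable[..., float]] = {
    "Add": lambda a, b: a + b,
    "Mul": lambda a, b: a * b,
}


def fold_constants(
    nodes: List[Node], constants: Dict[str, float]
) -> Tuple[List[Node], Dict[str, float]]:
    """Precompute every node whose inputs are all known constants.

    Returns the nodes that still need to run at inference time and the
    enlarged table of constant values.
    """
    consts = dict(constants)
    remaining: List[Node] = []
    for op_type, inputs, output in nodes:  # nodes are in topological order
        if all(name in consts for name in inputs):
            consts[output] = KERNELS[op_type](*(consts[n] for n in inputs))
        else:
            remaining.append((op_type, inputs, output))
    return remaining, consts


# y = x * (w1 * w2) + b: the product w1 * w2 never depends on the input x.
graph: List[Node] = [
    ("Mul", ["w1", "w2"], "w"),
    ("Mul", ["x", "w"], "xw"),
    ("Add", ["xw", "b"], "y"),
]
optimized, consts = fold_constants(graph, {"w1": 2.0, "w2": 3.0, "b": 1.0})
print(len(graph), "->", len(optimized))  # 3 -> 2
print(consts["w"])  # 6.0
```

Saving one multiplication is trivial here, but in real models folded subgraphs can be large, and the saving is repeated on every request.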

These benefits reinforce one another. High performance would be less valuable without portability, and portability would be risky without predictable behavior. ONNX Runtime is designed to balance all three, which explains its broad adoption across diverse deployment scenarios.

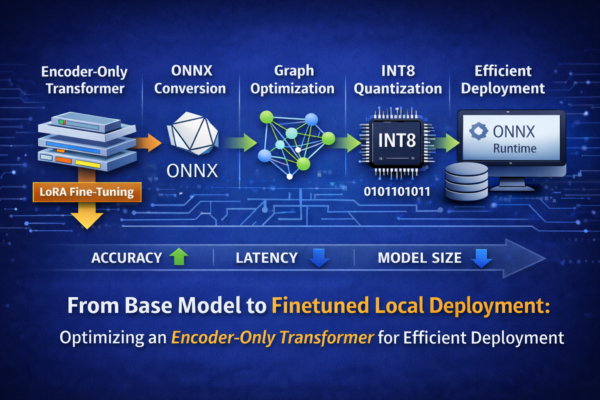

Quantization and Model Optimization

One practical example of ONNX Runtime’s optimization capabilities is quantization. Quantization is the process of reducing the numerical precision of model parameters and operations, for example by moving from 32-bit floating point values to 8-bit integers. Storing each weight in one byte instead of four shrinks the weights by roughly a factor of four, and the lower-precision arithmetic typically speeds up inference, often with minimal impact on accuracy.

ONNX Runtime supports quantization as part of its optimization toolchain, making it easier to prepare models for constrained environments such as edge devices or local execution on consumer hardware. In these scenarios, reduced memory footprint and improved performance can be the difference between a viable solution and an impractical one. Quantization is therefore not an academic exercise; it is a practical enabler for real-world deployments.
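The arithmetic behind 8-bit quantization is compact enough to sketch. The example below uses asymmetric (affine) quantization, a common scheme in which each value x is stored as q = round(x / scale) + zero_point in the range 0 to 255 and approximately recovered as (q - zero_point) * scale. The function names are hypothetical, and this illustrates the general technique rather than ONNX Runtime's code; in practice, the `onnxruntime.quantization` module (for example `quantize_dynamic`) applies quantization to an entire model for you.

```python
from typing import List, Tuple


def quantize_uint8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine 8-bit quantization (Python floats stand in for float32 weights)."""
    lo = min(min(values), 0.0)  # the range must include 0 so that 0.0 maps exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = round(-lo / scale)
    # Round to the nearest step, shift by the zero point, clamp to [0, 255].
    q = [max(0, min(255, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_uint8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map 8-bit codes back to approximate floating-point values."""
    return [(x - zero_point) * scale for x in q]


weights = [-1.0, 0.0, 0.5, 2.0]
q, scale, zp = quantize_uint8(weights)
restored = dequantize_uint8(q, scale, zp)
print(q, zp)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # bounded by about scale / 2
```

Each restored value is off by at most about half a quantization step (scale / 2), which is why accuracy often survives quantization while storage drops to one byte per value.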

Where ONNX Runtime Runs: From Edge to Browser

One of the defining characteristics of ONNX Runtime is the breadth of environments it supports. It can run on servers and laptops, on edge devices with limited resources, and even inside web browsers. This flexibility aligns well with modern application architectures, where inference may happen close to the user for latency, privacy, or cost reasons.

Rather than forcing developers to choose different runtimes for different targets, ONNX Runtime provides a unified approach. The same model and largely the same code paths can be reused across deployment scenarios, reducing operational complexity and long-term maintenance costs.

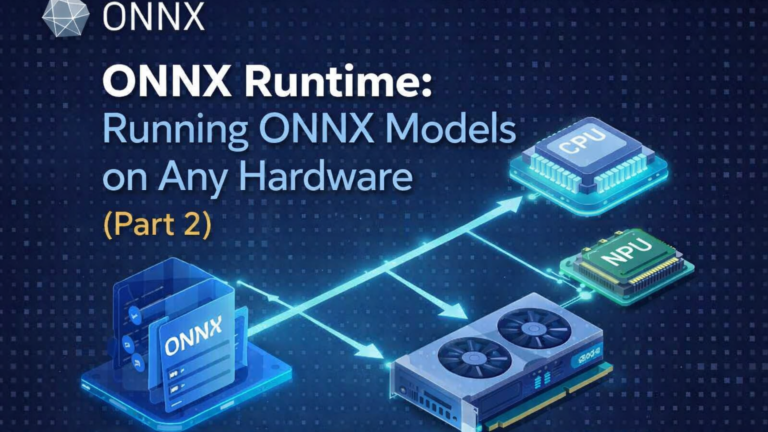

Execution Providers: How Hardware Acceleration Works

The mechanism that enables this adaptability is the concept of Execution Providers, often referred to as EPs. Execution Providers allow ONNX Runtime to delegate parts of a model’s execution to specific hardware backends, such as CPUs, GPUs, or specialized accelerators, while maintaining a consistent runtime interface.

From a developer’s perspective, this means that hardware acceleration can often be enabled through configuration rather than code changes. The runtime determines which parts of the model can be executed on which provider, balancing performance and compatibility. This design is central to ONNX Runtime’s ability to scale across devices and explains why it integrates so well into platforms like Foundry Local.
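The assignment step can be pictured with a simplified sketch: each node goes to the first provider, in priority order, that supports its operator, and anything left over falls to the CPU provider. The `partition` helper, the node format, `MyAcceleratorExecutionProvider`, and its capability table are all hypothetical. ONNX Runtime's actual partitioning works on subgraphs that each provider claims, and it also has to handle details this sketch ignores, such as copying data between devices.

```python
from typing import Dict, List, Set, Tuple

# (node_name, op_type): a hypothetical, heavily simplified view of a graph node.
Node = Tuple[str, str]


def partition(
    nodes: List[Node], providers: List[str], supported: Dict[str, Set[str]]
) -> Dict[str, str]:
    """Assign each node to the first provider, in priority order, that supports its op."""
    assignment: Dict[str, str] = {}
    for name, op_type in nodes:
        for provider in providers:
            if op_type in supported.get(provider, set()):
                assignment[name] = provider
                break
        else:  # no provider claimed this node
            raise ValueError(f"no provider can run {name} ({op_type})")
    return assignment


# Invented capability table: the accelerator handles only some operators,
# while the CPU provider acts as the catch-all fallback.
supported = {
    "MyAcceleratorExecutionProvider": {"Conv", "Relu"},
    "CPUExecutionProvider": {"Conv", "Relu", "NonMaxSuppression"},
}
nodes = [("conv1", "Conv"), ("relu1", "Relu"), ("nms", "NonMaxSuppression")]
providers = ["MyAcceleratorExecutionProvider", "CPUExecutionProvider"]
for node, provider in partition(nodes, providers, supported).items():
    print(node, "->", provider)
```

With this invented capability table, `conv1` and `relu1` land on the accelerator and `nms` falls back to the CPU provider, while the model itself is unchanged.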

Summary of supported Execution Providers

| CPU | GPU | IoT/Edge/Mobile | Other |
|---|---|---|---|
| Default CPU | NVIDIA CUDA | Intel OpenVINO | Rockchip NPU (preview) |
| Intel DNNL | NVIDIA TensorRT | Arm Compute Library (preview) | Xilinx Vitis-AI (preview) |
| TVM (preview) | DirectML | Android Neural Networks API | Huawei CANN (preview) |
| Intel OpenVINO | AMD MIGraphX | Arm NN (preview) | AZURE (preview) |
| XNNPACK | Intel OpenVINO | CoreML (preview) | |
| | AMD ROCm (deprecated) | Qualcomm QNN | |
| | | XNNPACK | |


The table above illustrates how ONNX Runtime maps a single ONNX model to a wide range of hardware and deployment environments through its Execution Provider architecture. Execution Providers act as interchangeable backends that allow the runtime to target different CPUs, GPUs, accelerators, and specialized devices without changing the model itself.

CPU-based providers form the universal baseline and ensure that models can run anywhere, while optimized variants improve performance on modern processors. GPU Execution Providers focus on high-throughput and low-latency scenarios by integrating with vendor-specific acceleration stacks. In contrast, IoT, edge, and mobile providers prioritize efficiency and footprint, enabling inference on resource-constrained devices such as embedded systems and smartphones.

The presence of community-maintained and preview providers highlights the extensibility of ONNX Runtime. New hardware platforms can be supported without fragmenting the inference stack, because ONNX Runtime coordinates execution across providers and falls back to the default CPU provider for any part of the model that a specialized provider cannot handle. This design is what allows the same model to run consistently from edge devices to powerful servers and even within web-based environments.
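In ONNX Runtime's Python API, the main thing that changes between deployment targets is usually the list of execution providers passed to `onnxruntime.InferenceSession`, which the runtime treats as a priority order. The sketch below shows one way to keep a single code path for every target. The target labels and the `PREFERENCES` table are hypothetical; the provider strings are real ONNX Runtime names, but which of them are present depends on the onnxruntime package you install.

```python
from typing import Dict, List

# Hypothetical deployment targets mapped to provider preference lists.
# The provider strings are real ONNX Runtime names; availability depends
# on which onnxruntime build is installed.
PREFERENCES: Dict[str, List[str]] = {
    "nvidia-server": ["TensorrtExecutionProvider", "CUDAExecutionProvider"],
    "windows-laptop": ["DmlExecutionProvider"],
    "apple-device": ["CoreMLExecutionProvider"],
    "snapdragon-pc": ["QNNExecutionProvider"],
}


def choose_providers(target: str, available: List[str]) -> List[str]:
    """Keep the preferred providers that are available, always ending with CPU."""
    chosen = [p for p in PREFERENCES.get(target, []) if p in available]
    chosen.append("CPUExecutionProvider")  # universal baseline and fallback
    return chosen


# Simulated availability, e.g. a CUDA build without TensorRT:
print(choose_providers("nvidia-server", ["CUDAExecutionProvider", "CPUExecutionProvider"]))

# With onnxruntime installed, the same call site would serve every target
# ("model.onnx" is a placeholder path):
#   import onnxruntime as ort
#   session = ort.InferenceSession(
#       "model.onnx",
#       providers=choose_providers("nvidia-server", ort.get_available_providers()),
#   )
```

Because the CPU provider is always appended last, an unknown target or a missing accelerator degrades to CPU execution rather than failing.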

Conclusion: Closing the Gap from the Meetup

This post set out to answer a simple question that emerged from a meetup discussion: what exactly is ONNX Runtime, and why does it matter? The answer is that ONNX Runtime is the execution engine that turns ONNX models into practical, performant, and portable AI solutions.

Part 1 of this series explained how ONNX standardizes model representation. Part 2 completes the picture by showing how ONNX Runtime operationalizes those models across environments, often invisibly but always critically. Together, they form the foundation for modern AI deployment, whether in the cloud, on the edge, or directly on a user’s device.

Understanding ONNX Runtime is not just about knowing another component in the stack; it is about understanding how AI systems move from theory to reality.