In Part 1 of this series, we explored what ONNX is, why it was created, and how it decouples model training from deployment by providing a standardized, framework-agnostic model format. That foundation is essential for understanding the next step in the ONNX ecosystem.

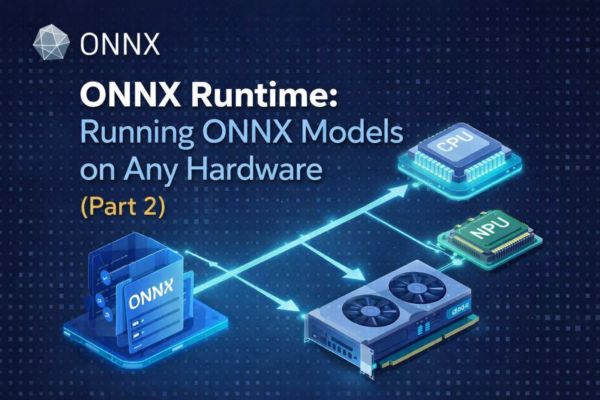

In this article, we focus on ONNX Runtime—the execution engine that brings ONNX models to life. You will learn how ONNX Runtime loads and optimizes ONNX models, selects the appropriate execution provider, and runs inference efficiently across CPUs, GPUs, and NPUs.